GitBucket

4.21.2

• Added first draft of literature review.
• Added more notes.
• Added web output figure.
• Changed console output table into a figure.

Koli_2017/Koli_2017_Stanger.bib

Koli_2017/Koli_2017_Stanger.tex

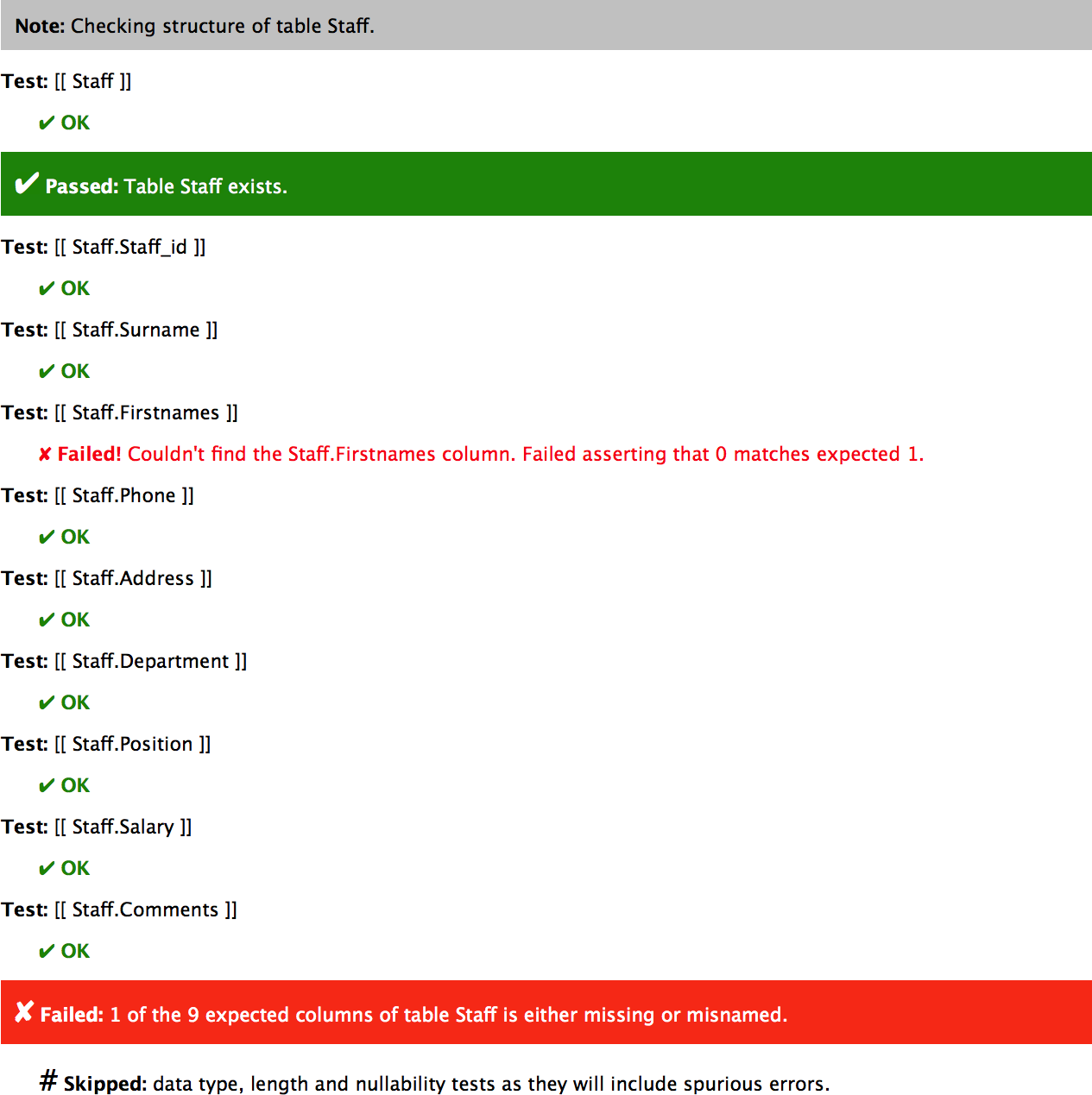

Koli_2017/images/web_output.png 0 → 100644
